This post is also available in:

![]() English

English

As of today (October 2019), there is still no internal capability in Oracle Cloud Infrastructure to export the metadata of all the billing information you have in your OCI to a CSV, JSON or any other file that would allow us to easily import in other tools to create our own graphs.

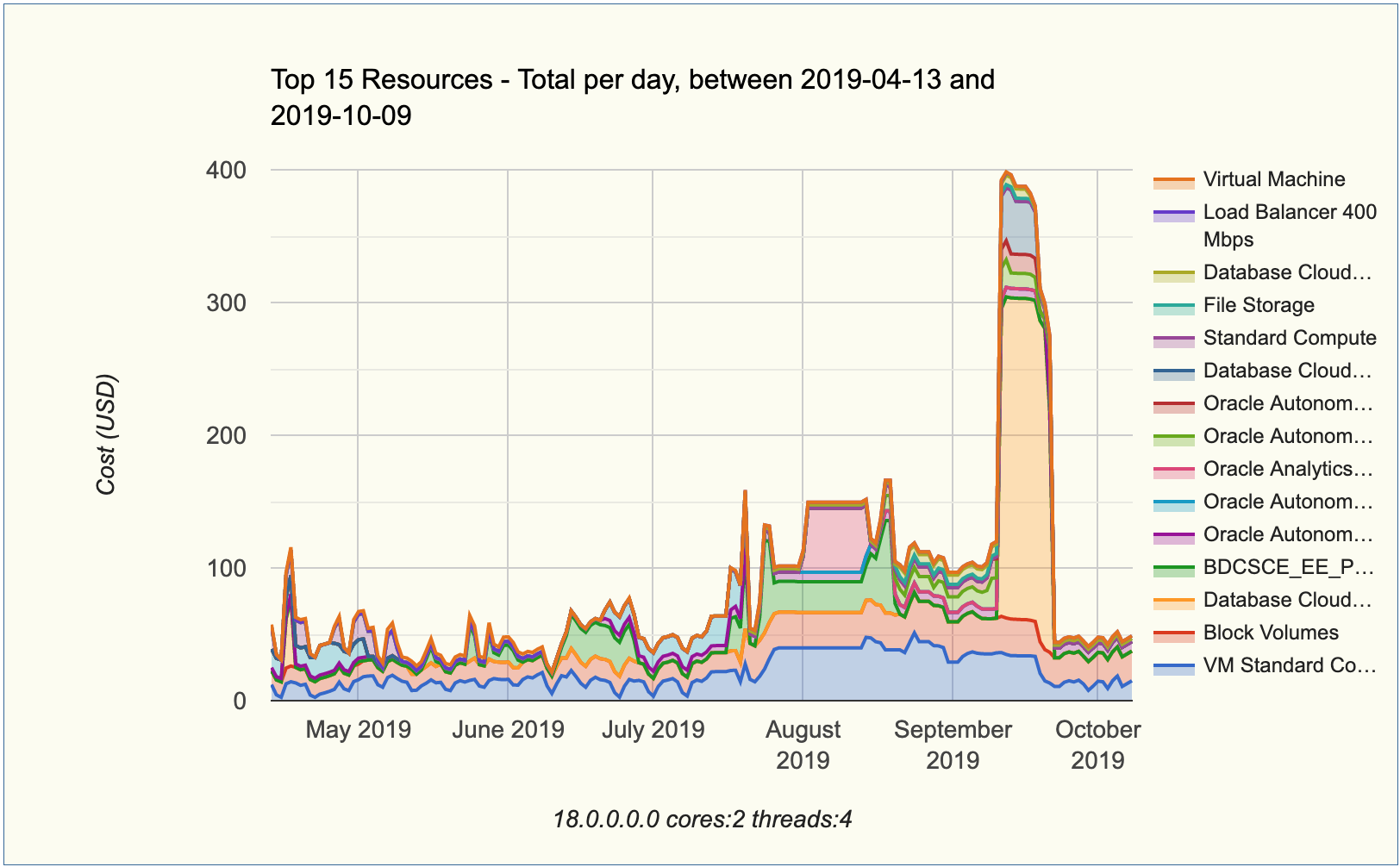

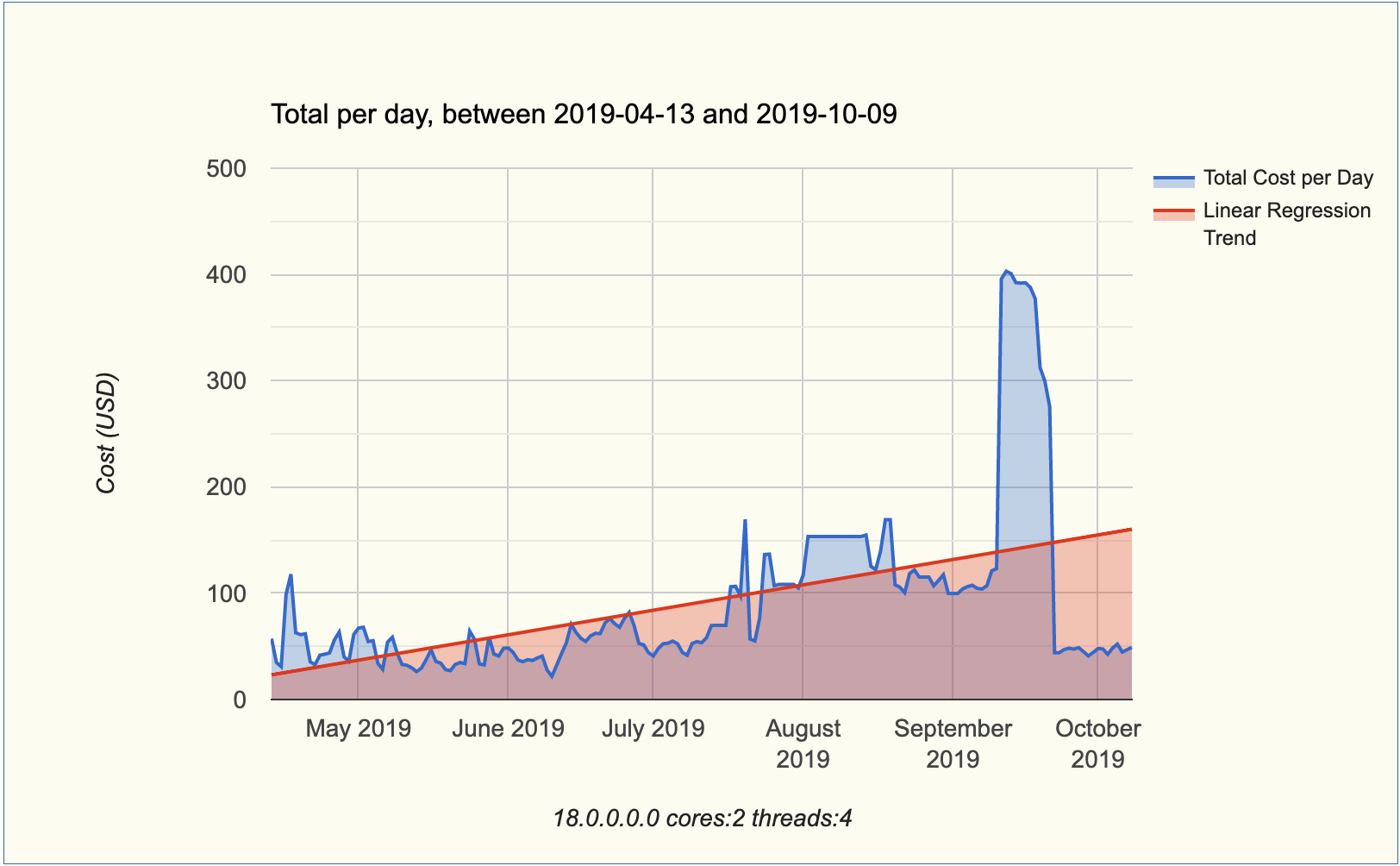

Meanwhile, I have some customers that work with OCI and have the requisite to create their own costs graphs and generate some linear regression estimates of future costs, like the graphs below:

Expenses by resource type per day.

Total Expenses per day and Linear Regression trend.

To make this possible, I had to find a way to extract all the billing information from OCI. As the steps to manually export all the billing data are not trivial, I've developed a script that will do all the work.

The script is public available at my github oci-scripts page: oci_json_billing.sh

How does it work?

The oci_json_billing.sh is a 100% public bash shell script tool that extracts all the Oracle Cloud Infrastructure billing information into JSON files and compact in a single zip output.

- It uses cURL and jq in the backend.

- Each execution of oci_json_billing.sh may take several minutes, however, it will not impact the OCI performance.

- Execution time is long since oci_json_billing.sh makes use of more than a hundred single-threaded sequentially-executed curl calls to produce its output.

- For more information about oci_json_billing.sh, refer to content and links at https://github.com/dbarj/oci-scripts/blob/master/oci_json_billing.sh

Pre-requisites

As already said, oci_json_billing.sh depends that you have a bash shell, cURL and jq.

Bash Shell

Bash is the GNU Project's shell. It’s available on most UNIX operating systems, like Linux and Mac.

cURL

cURL is a computer software project providing a library and command-line tool for transferring data using various protocols. It's also available on most UNIX operating systems, like Linux and Mac.

JQ

JQ stands for Json Query, it’s a parser that is required to generate and process JSON files generated by cURL. It’s available at https://github.com/stedolan/jq/releases

[user@localhost ~]$ cd ~/bin [user@localhost ~]$ wget -O jq https://github.com/stedolan/jq/releases/download/jq-1.5/jq-linux64 100%[===========================================>] 2.89M 862KB/s in 3.8s 2018-09-17 18:55:00 (776 KB/s) - ‘jq’ saved [3027945/3027945] [user@localhost ~]$ chmod +x jq [user@localhost ~]$ export PATH=$PATH:`pwd` [user@localhost ~]$ which jq /Users/myuser/bin/jq

Some linux distributions also provides JQ straight from the yum repository.

How to run it?

1 - Download the latest version of the public tool oci_json_billing.sh from https://raw.githubusercontent.com/dbarj/oci- scripts/master/oci_json_billing.sh

2 - Copy to server (into any directory) where it will be executed. Optionally, you

can download it directly to the server using wget:

[user@localhost ~]$ wget https://raw.githubusercontent.com/dbarj/oci-scripts/master/oci_json_billing.sh 100%[============================>] 26.39K --.-KB/s in 0.03s 2018-10-10 11:00:21 (979 KB/s) - ‘oci_json_billing.sh’ saved [27027/27027] [user@localhost ~]$ chmod +x oci_json_billing.sh

3 - Execute shell script oci_json_billing.sh:

[user@localhost ~]$ oci_json_billing.sh To run this tool, you must export: - CLIENT_USER, CLIENT_PASS, CLIENT_DOMAIN OR - CLIENT_ID, CLIENT_SECRET, CLIENT_DOMAIN

Note this tool will connect in your Oracle Cloud tenancy with and IDCS Application or User to extract all the billing into a JSON file. To create and account to handle that, check this article: https://www.dbarj.com.br/en/2019/06/creating-read-only-account-for-rest-billing-access-on-oracle-cloud/

4 - Export the required set of variables and re-run the tool:

[user@localhost ~]$ export CLIENT_ID="ce4e87e6d7e08af097cea781bca899cd" [user@localhost ~]$ export CLIENT_SECRET="cd78d7ab-a4b4-4fd6-269b-ebfe60cbe86c" [user@localhost ~]$ export CLIENT_DOMAIN="idcs-63d482e2accd67ba76bd767cd687c6b" [user@localhost ~]$ oci_json_billing.sh Usage: oci_json_billing.sh <option> [begin_time] [end_time] <option> - Execution Scope. [begin_time] (Optional) - Defines start time when exporting billing info. (Default is 90 days back) [end_time] (Optional) - Defines end time when exporting billing info. (Default is today) Valid <option> values are: - ALL - Execute json export for ALL possible options and compress output in a zip file. - accountDetails - checkQuota - cloudLimits - promotions - serviceEntitlements - serviceResources - usage - usageCost - usageCostTaggedDaily - usageCostTaggedHourly - usageTags

5 - Note you need a parameter. The 1st parameter must be either:

- ALL - Means it will scan and export all billing information found . Then it will zip the result in a single file.

- (Specific Value) - There are more than 10 other individually specific options you can pass and, in this case, it will export only the json for that object. The exported objected will be spooled to the stdout. You can optionally redirect to write in a specific file.

6 - The 2nd and 3rd parameters are optional and, if specified, defines the range of time you want to export the billing info. If omitted, the begging time will be 90 days back and the end time will be today.

Note: It's not recommended to collect billing information for a long range of data as this can take several hours to finish! If running for the first time, it's recommended keep 2nd and 3rd parameters for just a very short period.

7 - Finally, if you run it, it will also give you some warning in case you don't export HIST_ZIP_FILE.

[user@localhost ~]$ oci_json_billing.sh ALL 2019-10-01 2019-10-02 You haven't exported HIST_ZIP_FILE variable, meaning you won't keep a execution hist that can be reused on next script calls. With zip history, next executions will be much faster. It's extremelly recommended to enable it. Press CTRL+C in next 10 seconds if you want to exit and fix this. ^CTerminated: 15

It's very important and recommended to export HIST_ZIP_FILE. This parameter specifies a zip file that will store the past executions logs and jsons, so in case you re-run the tool for a given overlapped timeframe, it will only get the delta that was not yet retrieved from the cloud. All the rest will be retrieved from the historical zip file.

Execution Example

Below is a execution demo of the tool:

[user@localhost ~]$ ls -l oci_json_billing.sh -rwxr-xr-x 1 rodrigo.jorge staff 35831 Oct 10 12:30 oci_json_billing.sh [user@localhost ~]$ export HIST_ZIP_FILE="/Users/rodrigo.jorge/billing_hist.zip" [user@localhost ~]$ ./oci_json_billing.sh ALL 2019-10-01 2019-10-02 Processing "oci_cloud_serviceEntitlements.json". Processing "oci_cloud_serviceResources.json". Processing "oci_cloud_usageTags.json". Processing "oci_cloud_usageCost.json". Processing "oci_cloud_usage.json". Processing "oci_cloud_usageCostTaggedDaily.json". Processing "oci_cloud_usageCostTaggedHourly.json". Processing "oci_cloud_accountDetails.json". Processing "oci_cloud_checkQuota.json". Processing "oci_cloud_promotions.json". Processing "oci_cloud_cloudLimits.json". [user@localhost ~]$

Optionally, you can export the variable DEBUG=1 before calling oci_json_billing.sh script. You will be able to view execution steps.:

Note: DEBUG is automatically enabled when running with ALL option.

[user@localhost ~]$ export DEBUG=1

[user@localhost ~]$ ./oci_json_billing.sh ALL 2019-10-01 2019-10-02

Processing "oci_cloud_serviceEntitlements.json".

Processing "oci_cloud_serviceResources.json".

Processing "oci_cloud_usageTags.json".

...

## (In another Shell)

[user@localhost ~]$ ls -ltr

total 224

-rw------- 1 rodrigo.jorge staff 1582 Oct 10 13:14 access_token_1_file.json

-rw------- 1 rodrigo.jorge staff 1579 Oct 10 13:14 access_token_2_file.json

-rw-r--r-- 1 rodrigo.jorge staff 33983 Oct 10 13:16 oci_json_billing_20191010131412.zip

-rw-r--r-- 1 rodrigo.jorge staff 138 Oct 10 13:16 oci_json_billing_list.txt

-rw-r--r-- 1 rodrigo.jorge staff 0 Oct 10 13:16 oci_cloud_usageCostTaggedDaily.json

-rw-r--r-- 1 rodrigo.jorge staff 0 Oct 10 13:16 oci_cloud_usageCostTaggedDaily.json.err

drwxr-xr-x 59 rodrigo.jorge staff 1888 Oct 10 13:16 billing_history

-rw-r--r-- 1 rodrigo.jorge staff 51224 Oct 10 13:16 billing_hist.zip

-rw-r--r-- 1 rodrigo.jorge staff 8868 Oct 10 13:16 oci_json_billing.log

[user@localhost ~]$ tail -f oci_json_billing.log

20191010131412: BEGIN

20191010131412: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/itas/idcs-63d482e2accd67ba76bd767cd687c6b/myservices/api/v1/serviceEntitlements"

20191010131413: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/itas/idcs-63d482e2accd67ba76bd767cd687c6b/myservices/api/v1/serviceEntitlements?offset=25&limit=25"

20191010131415: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/resources/ADWC"

20191010131418: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/resources/ANALYTICS"

...

20191010131549: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/resources/SOA"

20191010131552: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/resources/SSI"

20191010131554: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/resources/Storage"

20191010131557: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/resources/VISUALBUILDERAUTO"

20191010131559: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/resources/VisualBuilder"

20191010131600: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/resources/containerpipe"

20191010131605: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usage/cacct-1789adc89f08ea98a98c98b8989d98ef/tags?tagType=ALL"

20191010131606: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usagecost/cacct-1789adc89f08ea98a98c98b8989d98ef?startTime=2019-10-01T00:00:00.000Z&endTime=2019-10-02T00:00:00.000Z&usageType=HOURLY&timeZone=UTC&dcAggEnabled=Y"

20191010131611: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usage/cacct-1789adc89f08ea98a98c98b8989d98ef?startTime=2019-10-01T00:00:00.000Z&endTime=2019-10-02T00:00:00.000Z&usageType=HOURLY&timeZone=UTC"

20191010131619: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usagecost/cacct-1789adc89f08ea98a98c98b8989d98ef/tagged?startTime=2019-10-01T00:00:00.000Z&endTime=2019-10-02T00:00:00.000Z&usageType=DAILY&timeZone=UTC&tags=ORCL%3AOCIService%3DObject%20Storage"

20191010131620: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usagecost/cacct-1789adc89f08ea98a98c98b8989d98ef/tagged?startTime=2019-10-01T00:00:00.000Z&endTime=2019-10-02T00:00:00.000Z&usageType=DAILY&timeZone=UTC&tags=ORCL%3AOCICompartment%3Docid1.tenancy.oc1..aaaaaaaapcuyfcp6umbdlafqqrxzbmm7uvypvdnddfforof4q7jxro7obw7a"

20191010131622: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usagecost/cacct-1789adc89f08ea98a98c98b8989d98ef/tagged?startTime=2019-10-01T00:00:00.000Z&endTime=2019-10-02T00:00:00.000Z&usageType=DAILY&timeZone=UTC&tags=ORCL%3AOCIService%3DNetworking"

...

20191010131636: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usagecost/cacct-1789adc89f08ea98a98c98b8989d98ef/tagged?startTime=2019-10-01T00:00:00.000Z&endTime=2019-10-02T00:00:00.000Z&usageType=DAILY&timeZone=UTC&tags=ORCL%3AOCICompartmentName%3DTestCases"

20191010131637: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usagecost/cacct-1789adc89f08ea98a98c98b8989d98ef/tagged?startTime=2019-10-01T00:00:00.000Z&endTime=2019-10-02T00:00:00.000Z&usageType=DAILY&timeZone=UTC&tags=ORCL%3AOCICompartmentName%3DMakroGroup"

20191010131639: curl -s -X GET -H "Authorization: Bearer ${CLIENT_TOKEN}" "https://itra.oraclecloud.com/metering/api/v1/usagecost/cacct-1789adc89f08ea98a98c98b8989d98ef/tagged?startTime=2019-10-01T00:00:00.000Z&endTime=2019-10-02T00:00:00.000Z&usageType=DAILY&timeZone=UTC&tags=ORCL%3AOCICompartmentName%3DMachineClone"

...

Checking output

After execution is completed, you will have an output in the format oci_json_billing_YYYYMMDDHHMISS.zip:

[user@localhost ~]$ ls -l

total 1288

-rw-r--r-- 1 rodrigo.jorge staff 492560 Oct 10 13:32 billing_hist.zip

-rw-r--r-- 1 rodrigo.jorge staff 160891 Oct 10 13:32 oci_json_billing_20191010131412.zip

[user@localhost ~]$ unzip -l oci_json_billing_20191010131412.zip

Archive: oci_json_billing_20191010131412.zip

Length Date Time Name

--------- ---------- ----- ----

70700 10-10-2019 13:14 oci_cloud_serviceEntitlements.json

283867 10-10-2019 13:16 oci_cloud_serviceResources.json

41910 10-10-2019 13:16 oci_cloud_usageTags.json

412495 10-10-2019 13:16 oci_cloud_usageCost.json

273442 10-10-2019 13:16 oci_cloud_usage.json

294048 10-10-2019 13:22 oci_cloud_usageCostTaggedDaily.json

7000743 10-10-2019 13:30 oci_cloud_usageCostTaggedHourly.json

51936 10-10-2019 13:30 oci_cloud_accountDetails.json

116190 10-10-2019 13:32 oci_cloud_checkQuota.json

17 10-10-2019 13:32 oci_cloud_promotions.json

51 10-10-2019 13:32 oci_cloud_cloudLimits.json

320 10-10-2019 13:32 oci_json_billing_list.txt

200703 10-10-2019 13:32 oci_json_billing.log

--------- -------

8746422 13 files

[user@localhost ~]$

The billing_hist.zip file, as mentioned before, will be used to store the execution logs. So, in case you re-run the script with overlapping times, it won't need to get the same information again from the Cloud using cUrl, bur rather from the zip.

To get the data, you can simply unzip the file and process it.

[user@localhost ~]$ unzip oci_json_billing_20191010131412.zip oci_cloud_usageCost.json Archive: oci_json_billing_20191010131412.zip inflating: oci_cloud_usageCost.json [user@localhost ~]$ jq -r '.data[] | select(."serviceName"=="COMPUTEBAREMETAL" and ."resourceName"=="PIC_BLOCK_STORAGE_STANDARD") | [."costs"[]."computedQuantity",."costs"[]."computedAmount",."costs"[]."unitPrice",."costs"[]."overagesFlag",."startTimeUtc",."endTimeUtc",."dataCenterId"] | @csv' oci_cloud_usageCost.json 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T00:00:00.000","2019-10-01T01:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T01:00:00.000","2019-10-01T02:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T02:00:00.000","2019-10-01T03:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T03:00:00.000","2019-10-01T04:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T04:00:00.000","2019-10-01T05:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T05:00:00.000","2019-10-01T06:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T06:00:00.000","2019-10-01T07:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T07:00:00.000","2019-10-01T08:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T08:00:00.000","2019-10-01T09:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T09:00:00.000","2019-10-01T10:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T10:00:00.000","2019-10-01T11:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T11:00:00.000","2019-10-01T12:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T12:00:00.000","2019-10-01T13:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T13:00:00.000","2019-10-01T14:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T14:00:00.000","2019-10-01T15:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T15:00:00.000","2019-10-01T16:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T16:00:00.000","2019-10-01T17:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T17:00:00.000","2019-10-01T18:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T18:00:00.000","2019-10-01T19:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T19:00:00.000","2019-10-01T20:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T20:00:00.000","2019-10-01T21:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T21:00:00.000","2019-10-01T22:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T22:00:00.000","2019-10-01T23:00:00.000","us-phoenix-1" 155.48119623655913,15.4703790255,0.0995,"N","2019-10-01T23:00:00.000","2019-10-02T00:00:00.000","us-phoenix-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T00:00:00.000","2019-10-01T01:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T01:00:00.000","2019-10-01T02:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T02:00:00.000","2019-10-01T03:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T03:00:00.000","2019-10-01T04:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T04:00:00.000","2019-10-01T05:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T05:00:00.000","2019-10-01T06:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T06:00:00.000","2019-10-01T07:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T07:00:00.000","2019-10-01T08:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T08:00:00.000","2019-10-01T09:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T09:00:00.000","2019-10-01T10:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T10:00:00.000","2019-10-01T11:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T11:00:00.000","2019-10-01T12:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T12:00:00.000","2019-10-01T13:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T13:00:00.000","2019-10-01T14:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T14:00:00.000","2019-10-01T15:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T15:00:00.000","2019-10-01T16:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T16:00:00.000","2019-10-01T17:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T17:00:00.000","2019-10-01T18:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T18:00:00.000","2019-10-01T19:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T19:00:00.000","2019-10-01T20:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T20:00:00.000","2019-10-01T21:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T21:00:00.000","2019-10-01T22:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T22:00:00.000","2019-10-01T23:00:00.000","us-ashburn-1" 216.07666465053762,21.4996281327,0.0995,"N","2019-10-01T23:00:00.000","2019-10-02T00:00:00.000","us-ashburn-1"

What about the graphs?

To generate some graphs over the json data, you can convert and import the output to some tool like Microsoft Excel and create a chart or even to Google Charts. I will give more details about that in a later article.

Hope you enjoyed. #ThanksOGB

Have you enjoyed? Please leave a comment or give a 👍!